Such approaches deal with the question of what is shown in a given image. This became possible through algorithmic advances-as using rectified-linear units that avoid vanishing of the gradient during training ( Krizhevsky et al., 2012)-, as well as implementing convolutional and pooling layers that had originally been proposed long before ( Fukushima, 1980). Deep Neural Networks and in particular Convolutional Neuronal Networks have revolutionized the area of image classification during the last decade and are now the dominant approach for image classification leading to deeper and deeper architectures ( He et al., 2016). (2009)), the recent success of Deep Neural Networks in image related tasks ( Krizhevsky et al., 2012) has translated as well to the area of semantic segmentation. While approaches to semantic segmentation have been around for a long time (see review on more traditional approaches in Thoma (2016), or for example, He et al. On the other hand, it is required to label each pixel individually focusing on a very fine level of detail. As such, it is a challenging task that requires, on the one hand, to take into account the overall context of the image and for each pixel that of the surrounding area. It deals with recognizing which objects are shown and where exactly these are presented in the image. Semantic segmentation deals with the task of assigning each pixel in a given image to one of potentially multiple classes. A brief section will provide a comparison of the approaches followed by the conclusion.

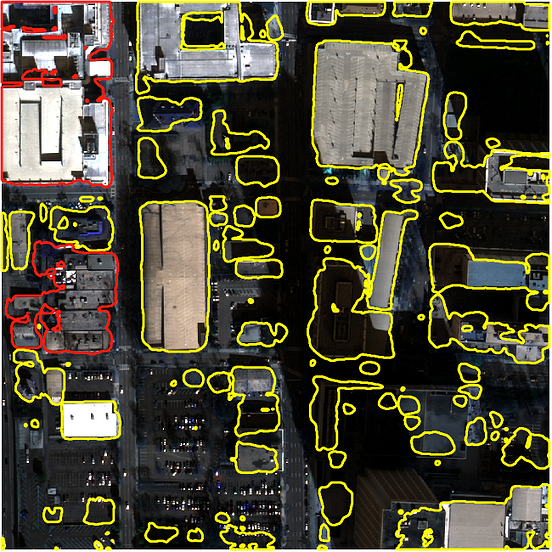

Afterwards, the different methods will be explained and presented together with accompanying results and we will analyze the effect of the depth of the U-Net structure on results. This will be followed by a brief description of the used dataset and the applied evaluation metrics. The next section will review related work with a particular focus on the development of U-Net and Mask-RCNN types of architectures. The different improvements and results for these five approaches are outlined in this paper. Five different adaptations of U-Net and Mask-RCNN based approaches were applied in context of this problem and showed top performance in the segmentation challenge. The top contestants all fall into these two basic categories and both show that they compete on a similar high level. This has been further substantiated by the success of such architectures in this competition and as well for the application in satellite imagery. Mask R-Convolutional Neuronal Networks (CNN) and U-Net type of architectures are currently seen as state-of-the-art for such problems. In general, different architectures for image segmentation have been proposed in the past. A large-scale competition was organized by the challenge platform crowdAI, which released a simplified version (details in Section 3) of the SpaceNet dataset, and attracted 55 participants and 719 submissions. In this work, we focus on the problem of instance segmentation on a simplified version of the SpaceNet dataset, in order to detect buildings in different urban settings on high resolution satellite imagery. This work builds on top of a recently released open dataset, SpaceNet ( v1) ( Spacenet on aws, 2018), which in partnership with Digital Globe, released raw multiband satellite imagery of (as high as) 30 cm resolution for numerous cities like Vegas, Paris, Shanghai, Khartoum, along with the corresponding annotations of buildings and roads. 
While access to high-performance compute infrastructure has not been an inhibiting factor, access to high-resolution imagery still stays a major inhibiting factor to high quality AI/ML research in satellite imagery and remote sensing. The same does however not hold true for the research community interested in satellite imagery and remote sensing. In this work, we explore how machine learning can help pave the way for automated analysis of satellite imagery to generate relevant and real-time maps.Īpplications of the state-of-the-art results in deep learning have been increasingly accessible to various different domains over the last few years ( LeCun et al., 2015), the main reasons being the advent of end-to-end approaches in deep learning ( LeCun et al., 2015), and the access to vast amounts of openly available data and high performance compute. Today, maps are produced by specialized organizations or in volunteer events such as mapathons, where imagery is annotated with roads, buildings, farms, rivers etc. Satellite imagery is readily available to humanitarian organizations, but translating images into maps is an intensive effort. Long-term and reignited conflicts affect people in many parts of the world, but often, accurate maps of the affected regions either do not exist or are outdated by disaster or conflict. Despite substantial advances in global human well-being, the world continues to experience humanitarian crizes and natural disasters.
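The vanishing-gradient argument for rectified linear units can be illustrated with a toy calculation. During backpropagation through a chain of layers, the gradient is multiplied by the activation's derivative at every layer. The logistic sigmoid's derivative never exceeds 0.25, so the product shrinks geometrically with depth. The derivative of a ReLU is exactly 1 for positive inputs. The sketch below is a simplified scalar chain, not a real network. Its assumptions are unit weights and the same pre-activation `z` at every layer, with `z = 0.5` chosen arbitrarily. All function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # Derivative of the logistic sigmoid; its maximum is 0.25, reached at z == 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z: float) -> float:
    # Derivative of max(0, z); we pick 0 as the subgradient at z == 0.
    return 1.0 if z > 0 else 0.0


def backprop_factor(grad_fn, depth: int, z: float = 0.5) -> float:
    """Product of activation derivatives along a chain of `depth` layers.

    Toy assumption: unit weights and the same pre-activation `z` in every
    layer, so the chain rule reduces to grad_fn(z) ** depth.
    """
    factor = 1.0
    for _ in range(depth):
        factor *= grad_fn(z)
    return factor


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(f"depth={depth:2d}  "
              f"sigmoid={backprop_factor(sigmoid_grad, depth):.3e}  "
              f"relu={backprop_factor(relu_grad, depth):.3e}")
```

With the sigmoid, the factor falls below 1e-12 by depth 20. With the ReLU, it stays at 1.0 for positive inputs. The ReLU factor drops to zero for negative inputs, which is the well-known "dying ReLU" trade-off.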
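To make the distinction between semantic and instance segmentation concrete, the following is a minimal sketch. It assumes a model that outputs one score per class for every pixel, indexed as `scores[y][x][c]`. Semantic segmentation then takes, for each pixel, the class with the highest score. Instance segmentation must additionally separate individual buildings. For a U-Net style semantic mask, one simple and common post-processing step is to split the building pixels into 4-connected components. This step is shown here purely for illustration; it is an assumption, not a description of what the challenge participants did. Mask R-CNN, by contrast, predicts instances directly. All names here (`label_map`, `instances`, the class index `1` for "building") are hypothetical.

```python
from collections import deque
from typing import List

Grid = List[List[int]]


def label_map(scores: List[List[List[float]]]) -> Grid:
    """Semantic segmentation: assign every pixel its highest-scoring class."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row]
            for row in scores]


def instances(labels: Grid, target: int) -> Grid:
    """Split pixels of class `target` into 4-connected components.

    Returns an instance-id map: 0 for pixels not of class `target`,
    1..K for the K separate connected regions (e.g. individual buildings).
    """
    h, w = len(labels), len(labels[0])
    ids = [[0] * w for _ in range(h)]
    next_id = 0
    for y in range(h):
        for x in range(w):
            if labels[y][x] != target or ids[y][x]:
                continue  # not the target class, or already assigned
            next_id += 1
            ids[y][x] = next_id
            queue = deque([(y, x)])
            while queue:  # breadth-first flood fill of one component
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                               (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and labels[ny][nx] == target
                            and not ids[ny][nx]):
                        ids[ny][nx] = next_id
                        queue.append((ny, nx))
    return ids


if __name__ == "__main__":
    # Toy 3x4 image: class 0 = background, class 1 = building (hypothetical).
    mask = [[1, 1, 0, 0],
            [1, 0, 0, 1],
            [0, 0, 0, 1]]
    scores = [[[0.2, 0.8] if m else [0.9, 0.1] for m in row] for row in mask]
    semantic = label_map(scores)
    print(semantic)
    print(instances(semantic, target=1))
```

In the toy example, the semantic map marks all building pixels with the same class. The instance map separates the two buildings into ids 1 and 2 because they do not touch. Touching buildings would be merged by this naive step. This is one reason instance-aware architectures such as Mask R-CNN are attractive for building detection.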